Research Projects

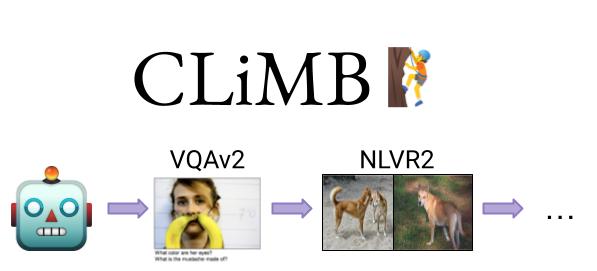

| CLiMB: The Continual Learning in Multimodality Benchmark. Collaborators: Ting-Yun Chang, Leticia Pinto Alva. Advisors: Jesse Thomason, Mohammad Rostami. CLiMB is a benchmark to study the novel challenge of learning vision-and-language tasks in a continual learning setting. |

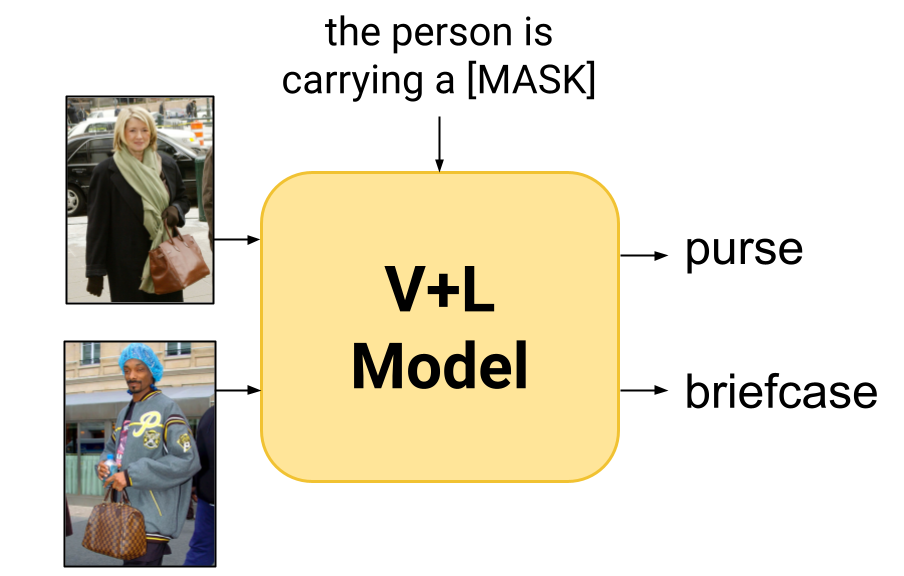

| Gender Bias in Pre-Trained Vision-and-Language Models. Advisor: Yonatan Bisk. We analyze intra- and inter-modality gender biases encoded by pre-trained vision-and-language models, which often prefer to reinforce stereotypes over faithfully describing the visual scene. |

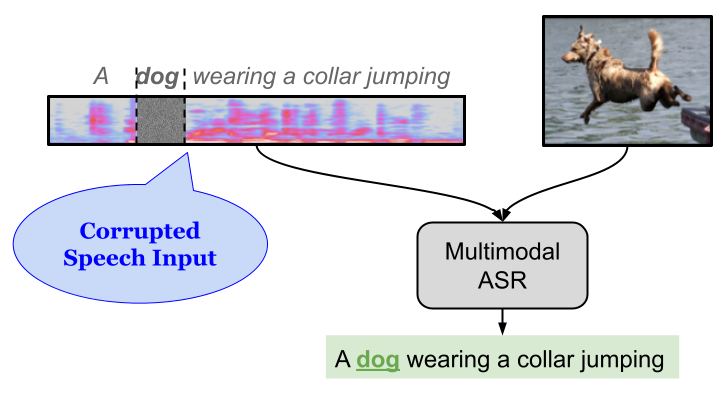

| Multimodal ASR for Recovering Noisy and Corrupted Speech. Collaborator: Ramon Sanabria. Advisors: Desmond Elliott, Florian Metze. We investigate the utility of multimodal ASR under noisy conditions, showing that the visual context can be leveraged to recover masked words in the speech signal. |